#Intellij jar for mapreduce code

You can also use the same Hello World code from Java Spark. There is essentially no difference between Java code written for Databricks and ordinary Spark Java code. Here is a small Azure Databricks Java example.

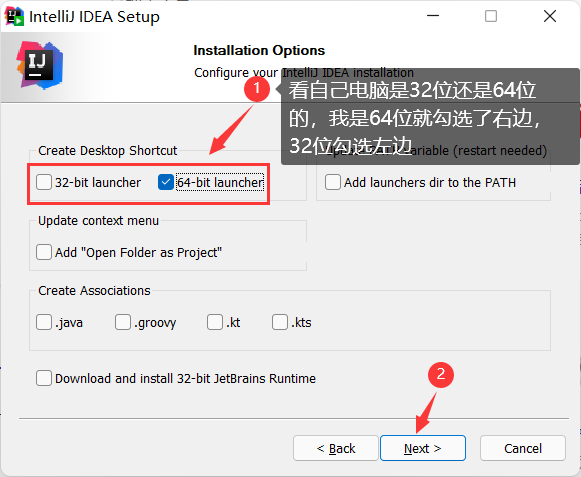

#Intellij jar for mapreduce install

Command to install Databricks Connect and configure it: run `pip install -U "databricks-connect==7.3.*"` (or X.Y.* to match your cluster version), then run `databricks-connect configure`. The client does not support Java 11.

For MRUnit, download the appropriate released version and untar the libraries. Check which JAR you need: mrunit-X.X.X-incubating-hadoop1.jar is for MapReduce version 1 of Hadoop, and mrunit-X.X.X-incubating-hadoop2.jar is for the new version of Hadoop's MapReduce.
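Because the client does not support Java 11, it can help to check the local Java version before configuring anything. The following is a minimal sketch using only the standard library; the class name `JavaVersionCheck` and its methods are hypothetical helpers written for illustration, and the rule "only major version 8 is accepted" is an assumption drawn from the requirement stated in this post.

```java
class JavaVersionCheck {

    /**
     * Extracts the major version from strings such as
     * "1.8", "1.8.0_292", "11", or "17.0.2".
     */
    public static int majorVersion(String version) {
        String[] parts = version.split("\\.");
        int first = Integer.parseInt(parts[0]);
        // Java 8 and earlier report "1.x"; Java 9 and later report "x" directly.
        if (first == 1 && parts.length > 1) {
            return Integer.parseInt(parts[1]);
        }
        return first;
    }

    /** Assumption for this sketch: only major version 8 counts as supported. */
    public static boolean isSupported(String version) {
        return majorVersion(version) == 8;
    }

    public static void main(String[] args) {
        // Standard system property holding the Java specification version.
        String version = System.getProperty("java.specification.version");
        if (isSupported(version)) {
            System.out.println("Java " + version + " looks suitable for the client.");
        } else {
            System.out.println("Java " + version + " detected; the client expects Java 8.");
        }
    }
}
```

Running the class with the same JRE that your IDE uses shows whether that runtime matches the requirement.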

#Intellij jar for mapreduce update

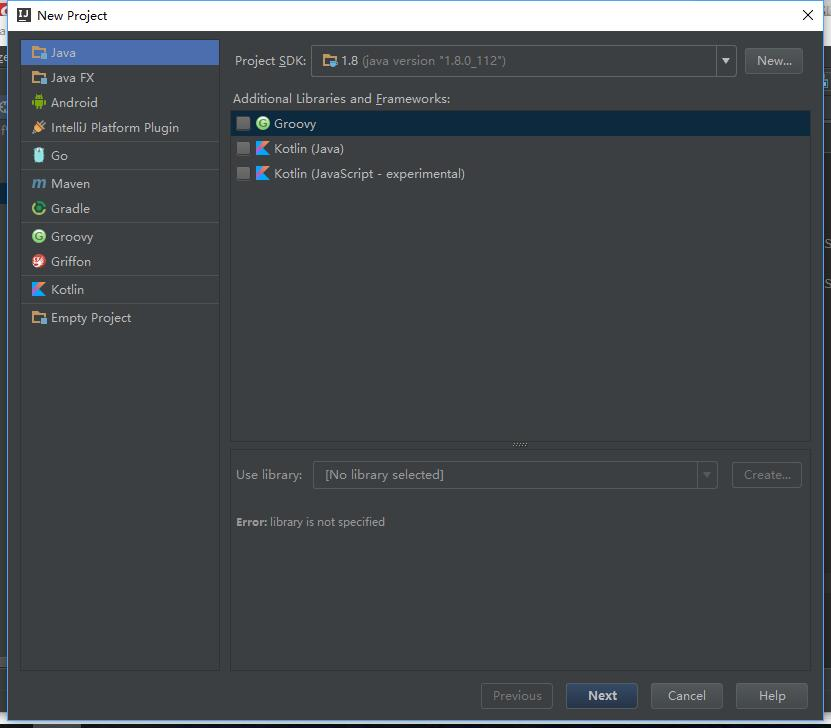

Approach 1: Upload the JAR as a library

When you have Java code for Spark, you can export it as an executable JAR. The JAR file contains your whole code. After that, go to the Databricks cluster, open the libraries, and upload the JAR file as a library there. Now you can create a Scala notebook and import the Java classes from the library. Use these Java classes and execute the Scala notebook.

Figure 1: Upload Java JAR file as library

Approach 2: Using Databricks Connect

Databricks Connect is the tool provided by Databricks Inc. to integrate your local environment with a Databricks cluster, so that the workload runs on the cluster directly. It allows connecting IDEs like Eclipse, IntelliJ, PyCharm, RStudio, and Visual Studio to Databricks clusters. In this approach there is no need to export the Java code as a JAR. It requires Java Runtime Environment (JRE) 8; the client has been tested with the OpenJDK 8 JRE. Once the configuration is done, executing Java Spark code on the Databricks cluster is straightforward.

Force update if already exists: specifies whether to overwrite an existing JAR package or function in the destination MaxCompute project that has the same name.

But we intend to make all the '-D'-prefix arguments runtime Hadoop parameters; the following way of writing the MapReduce task entry is not working, because argument ''.

Configuring the Classpath: Bundling up the Accumulo dependencies with a client JAR is discouraged, because it can.
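To illustrate the idea of treating '-D'-prefixed arguments as runtime parameters, here is a minimal standard-library sketch that separates `-Dkey=value` (and `-D key=value`) pairs from the remaining arguments. The class `DashDArgs` is a hypothetical helper, not part of Hadoop, and the property name in `main` is only an example value. In a real MapReduce job this separation is normally left to Hadoop's own `GenericOptionsParser` and `ToolRunner` rather than hand-written code.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class DashDArgs {
    /** Key/value pairs collected from -D arguments, in the order given. */
    public final Map<String, String> properties = new LinkedHashMap<>();
    /** All other arguments, e.g. input and output paths. */
    public final List<String> remaining = new ArrayList<>();

    public static DashDArgs parse(String[] args) {
        DashDArgs result = new DashDArgs();
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            String pair;
            if (arg.equals("-D") && i + 1 < args.length && args[i + 1].indexOf('=') > 0) {
                pair = args[++i];            // form: -D key=value
            } else if (arg.startsWith("-D") && arg.indexOf('=') > 2) {
                pair = arg.substring(2);     // form: -Dkey=value
            } else {
                result.remaining.add(arg);   // not a well-formed -D argument
                continue;
            }
            int eq = pair.indexOf('=');
            result.properties.put(pair.substring(0, eq), pair.substring(eq + 1));
        }
        return result;
    }

    public static void main(String[] args) {
        // Example invocation; the property name is illustrative only.
        DashDArgs parsed = parse(new String[] {
            "-Dmapreduce.job.reduces=2", "input", "output"
        });
        System.out.println("properties = " + parsed.properties);
        System.out.println("remaining  = " + parsed.remaining);
    }
}
```

Keeping the parsed properties apart from the positional arguments means the job entry point only ever sees its own inputs, which is the behavior the '-D' convention is meant to give.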
